NVIDIA has released JetPack 3.1, the production Linux software release for Jetson TX1 and TX2. With upgrades to TensorRT 2.1 and cuDNN 6.0, JetPack 3.1 delivers up to 2x the deep learning inference performance for real-time applications such as visual navigation and motion control, which benefit from accelerated inference at batch size 1. These improvements let Jetson deploy greater intelligence in a new generation of autonomous machines, including delivery robots, telepresence, and video analytics. To further advance robotics development, NVIDIA's recently launched Isaac initiative is an end-to-end platform for training and deploying advanced AI in the field.

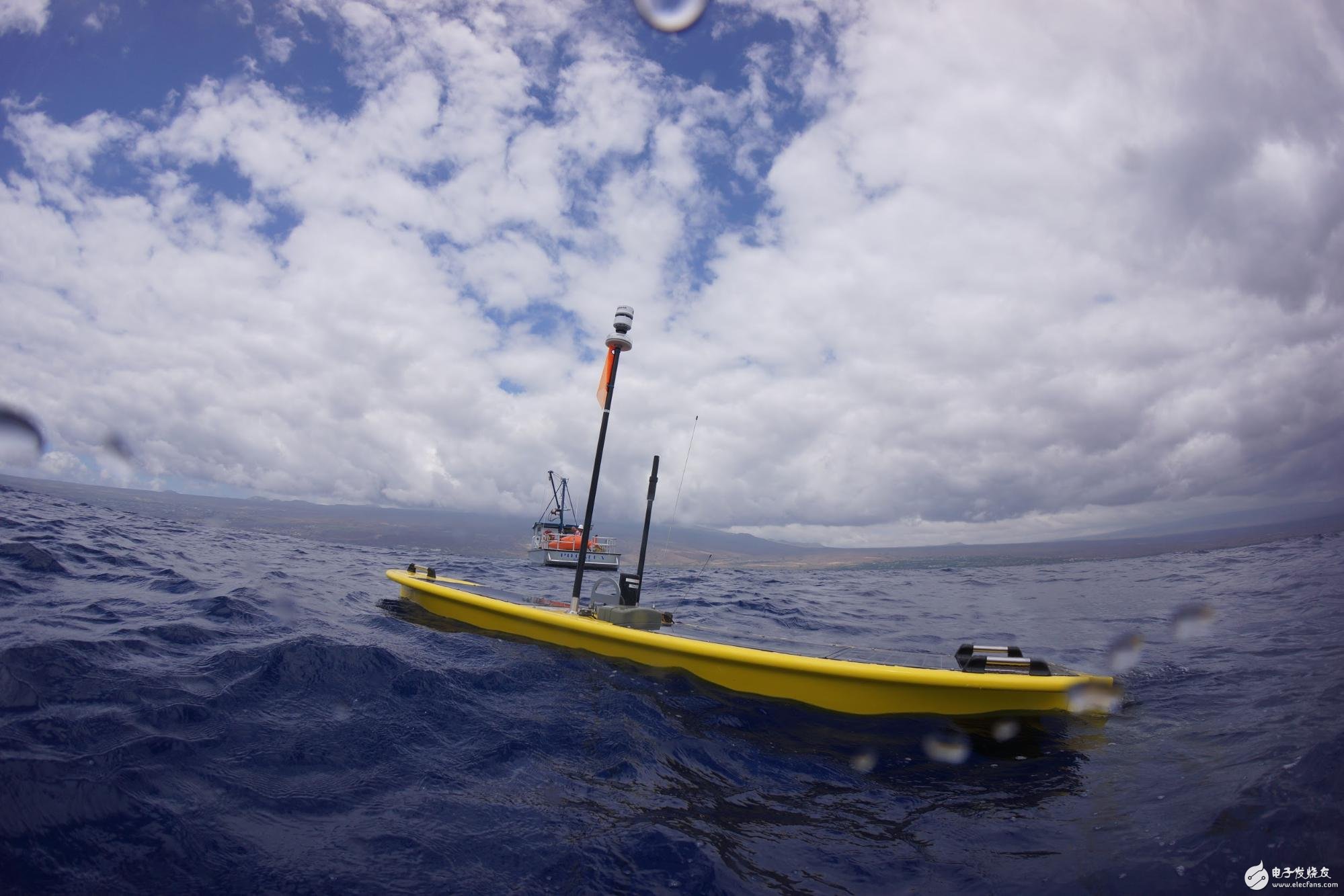

Figure 1. Liquid Robotics' wave- and solar-powered Wave Gliders can work with Jetson to cross oceans autonomously while performing low-power vision and AI processing.

With the introduction of Jetson TX2, the de facto platform for edge computing received a significant boost. As the Wave Glider platform in Figure 1 illustrates, remote IoT devices at the edge of the network often contend with limited network coverage, high latency, and low bandwidth. While IoT devices have typically served as gateways that relay data to the cloud, edge computing rethinks what IoT devices can do when they have access to secure onboard compute resources. NVIDIA's Jetson embedded modules deliver 1 TFLOP/s of server-class performance on Jetson TX1 and twice the AI performance on Jetson TX2, while drawing less than 10 W.

JetPack 3.1

JetPack 3.1 with Linux for Tegra (L4T) R28.1 is the production software release for Jetson TX1 and TX2 with Long Term Support (LTS). The L4T Board Support Package (BSP) for TX1 and TX2 is suitable for customer productization, and its shared Linux kernel 4.4 code base provides compatibility and seamless portability between the two modules. Starting with JetPack 3.1, developers have access to the same versions of libraries, APIs, and tools on both TX1 and TX2.

Table 1: Package versions included in JetPack 3.1 and the L4T BSP for Jetson TX1 and TX2.

| NVIDIA JetPack 3.1 software component | Version |
|---|---|
| Linux for Tegra | R28.1 |
| Ubuntu | 16.04 LTS aarch64 |
| CUDA Toolkit | 8.0.882 |
| cuDNN | 6.0 |
| TensorRT | 2.1 GA |
| GStreamer | 1.8.2 |
| VisionWorks | 1.6 |
| OpenCV4Tegra | 2.4.13-17 |
| Tegra System Profiler | 3.8 |
| Tegra Graphics Debugger | 2.4 |
| Tegra Multimedia API | V4L2 Camera/Codec API |

In addition to the upgrade from cuDNN 5.1 to 6.0 and continued updates to CUDA 8, JetPack 3.1 includes the latest vision and multimedia APIs for building streaming applications. You can download JetPack 3.1 to your host PC and use it to flash Jetson with the latest BSP and tools.

Low-Latency Inference Using TensorRT 2.1

JetPack 3.1 includes the latest version of TensorRT, so you can deploy optimized runtime deep learning inference on Jetson. TensorRT optimizes the network graph, and kernel fusion and half-precision FP16 support further improve inference performance. TensorRT 2.1 adds key features and enhancements, such as multi-weight batching, that further increase the deep learning performance and efficiency of Jetson TX1 and TX2 and reduce latency.
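
As a conceptual illustration of why kernel fusion helps, the minimal C++ sketch below compares a bias add followed by a ReLU run as two separate passes (with an intermediate buffer written and read back) against a single fused pass. It is a CPU analogy under stated assumptions, not TensorRT's implementation: TensorRT performs fusion on GPU kernels internally, and every function name here is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU analogy only; TensorRT fuses GPU kernels internally.

// Unfused: two separate passes ("kernels") with an intermediate buffer
// that is written to memory and then read back by the second pass.
std::vector<float> biasThenReluUnfused(const std::vector<float>& x, float bias) {
    std::vector<float> tmp(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        tmp[i] = x[i] + bias;                // pass 1: bias add
    }
    std::vector<float> y(tmp.size());
    for (std::size_t i = 0; i < tmp.size(); ++i) {
        y[i] = std::max(tmp[i], 0.0f);       // pass 2: ReLU
    }
    return y;
}

// Fused: a single pass applies bias add and ReLU per element,
// so no intermediate buffer is needed.
std::vector<float> biasReluFused(const std::vector<float>& x, float bias) {
    std::vector<float> y(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        y[i] = std::max(x[i] + bias, 0.0f);
    }
    return y;
}

// Fusion must not change the result, only the memory traffic.
void checkFusionEquivalence(const std::vector<float>& x, float bias) {
    assert(biasThenReluUnfused(x, bias) == biasReluFused(x, bias));
}
```

Both functions produce identical values; the fused version simply avoids the extra write and read of the intermediate buffer, which is the kind of memory traffic that fusion is meant to save.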

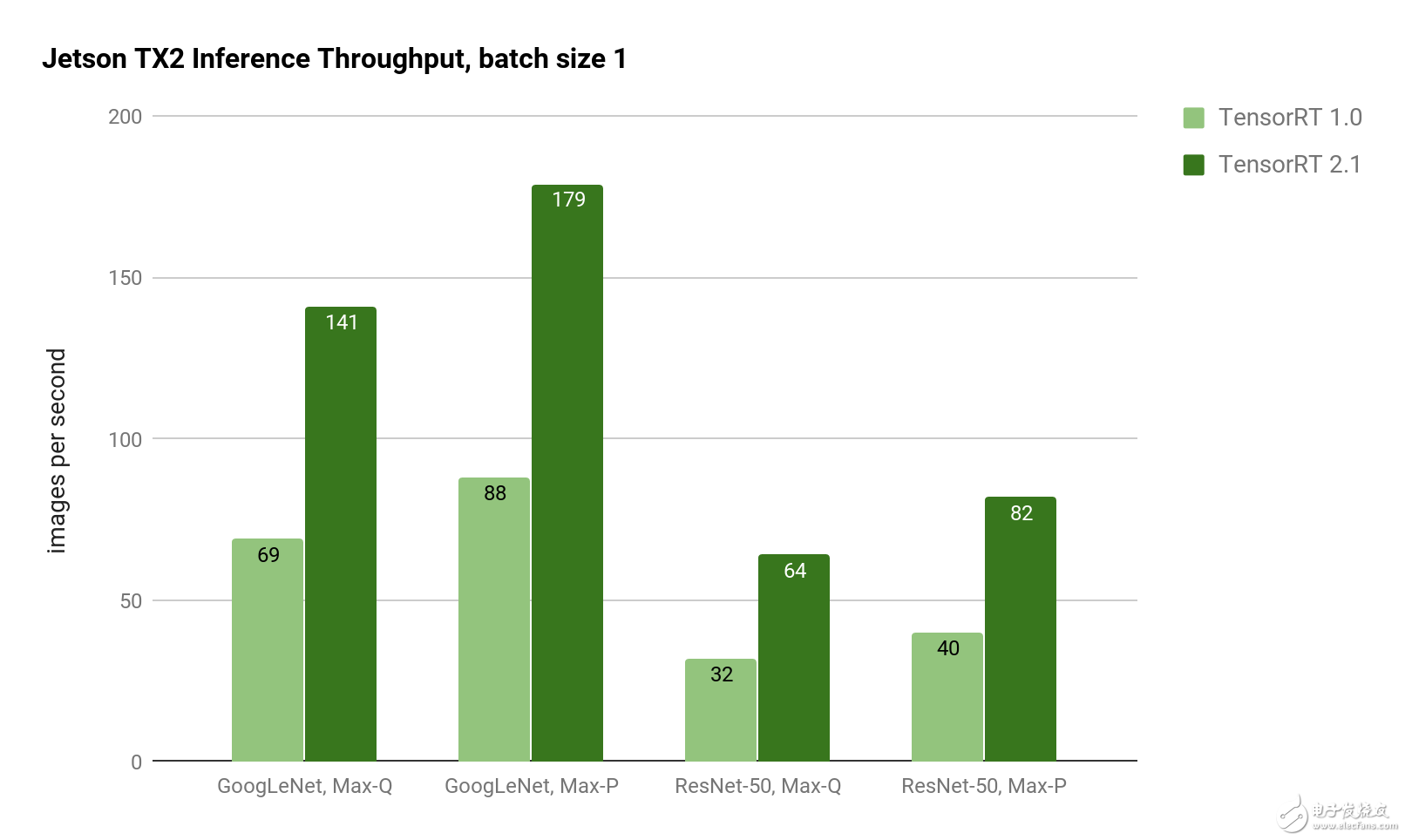

Performance at batch size 1 is significantly improved, bringing GoogLeNet latency down to 5.6 milliseconds. For latency-sensitive applications, batch size 1 gives the lowest latency because each frame is processed as soon as it arrives at the system, instead of waiting for multiple frames to accumulate into a batch. As Figure 2 shows, on Jetson TX2, TensorRT 2.1 achieves twice the throughput of TensorRT 1.0 for GoogLeNet and ResNet image recognition inference.

Figure 2: Inference throughput of GoogLeNet and ResNet-50 on Jetson TX2 with the Max-Q and Max-P power profiles. TensorRT 2.1 provides twice the inference throughput of TensorRT 1.0 on GoogLeNet and ResNet.
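
To make the batch-size-1 argument concrete, here is a minimal C++ sketch of a simple latency model. It assumes, hypothetically, that frames arrive at a fixed interval and that a batch is launched only after its last frame arrives, so the oldest frame in a batch of N waits for N - 1 further frames before inference even begins. The function name and the model are illustrative assumptions, not measurements.

```cpp
#include <cassert>

// Hypothetical latency model (illustration only):
// - frames arrive every framePeriodMs milliseconds,
// - inference on a batch starts only after the batch's last frame arrives,
// - batchInferenceMs is the time to run inference on the whole batch.
// Returns the latency seen by the oldest frame in the batch.
double worstCaseLatencyMs(int batchSize, double framePeriodMs, double batchInferenceMs) {
    assert(batchSize >= 1 && framePeriodMs >= 0.0 && batchInferenceMs >= 0.0);
    // The oldest frame waits for (batchSize - 1) later frames to arrive.
    double waitForBatchMs = (batchSize - 1) * framePeriodMs;
    return waitForBatchMs + batchInferenceMs;
}
```

For example, with a 30 fps camera (one frame every 33.3 ms) and the 5.6 ms GoogLeNet Max-P latency from Table 2, `worstCaseLatencyMs(1, 1000.0 / 30, 5.6)` is 5.6 ms, while `worstCaseLatencyMs(4, 1000.0 / 30, 5.6)` is already about 105.6 ms, even under the optimistic assumption that a batch of four takes no longer than a single frame.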

The latencies in Table 2 show the reduction at batch size 1. For GoogLeNet, Jetson TX2 achieves 5.6 ms latency with the Max-P performance profile and 7.1 ms with the Max-Q efficiency profile. ResNet-50 has 12.2 ms latency in Max-P and 15.6 ms in Max-Q. ResNet is often used to improve image classification accuracy beyond GoogLeNet, and TensorRT 2.1 improves its runtime performance by more than 2x. With the 8 GB memory capacity of Jetson TX2, batch sizes up to 128 are possible even on complex networks like ResNet.

Table 2: Jetson TX2 deep learning inference latency at batch size 1, comparing TensorRT 1.0 and 2.1 (lower is better).

| Network, power profile | TensorRT 1.0 latency | TensorRT 2.1 latency | Speedup |
|---|---|---|---|
| GoogLeNet, Max-Q | 14.5 ms | 7.1 ms | 2.04x |
| GoogLeNet, Max-P | 11.4 ms | 5.6 ms | 2.04x |
| ResNet-50, Max-Q | 31.4 ms | 15.6 ms | 2.01x |
| ResNet-50, Max-P | 24.7 ms | 12.2 ms | 2.03x |

The reduced latency makes deep learning inference practical for applications that require near-real-time response, such as collision avoidance and autonomous navigation on high-speed drones and ground vehicles.
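
The speedup column is simply the ratio of the two latencies, and at batch size 1 the latency also sets the frame rate of a pipeline that processes frames strictly one after another. The short C++ sketch below recomputes both from the Table 2 values. The struct and function names are hypothetical, and the frame-rate figure is a simplification that ignores pre- and post-processing and any overlap between frames.

```cpp
#include <cassert>
#include <cstdio>

// One row of Table 2: batch-size-1 latencies in milliseconds.
struct LatencyRow {
    const char* config;  // network and power profile
    double trt10Ms;      // TensorRT 1.0 latency
    double trt21Ms;      // TensorRT 2.1 latency
};

// Speedup = old latency / new latency.
double speedup(const LatencyRow& r) {
    assert(r.trt21Ms > 0.0);
    return r.trt10Ms / r.trt21Ms;
}

// Frames per second if each frame is processed alone, one after another.
double framesPerSecond(double latencyMs) {
    assert(latencyMs > 0.0);
    return 1000.0 / latencyMs;
}

void printTable2Summary() {
    const LatencyRow rows[] = {
        {"GoogLeNet, Max-Q", 14.5, 7.1},
        {"GoogLeNet, Max-P", 11.4, 5.6},
        {"ResNet-50, Max-Q", 31.4, 15.6},
        {"ResNet-50, Max-P", 24.7, 12.2},
    };
    for (const LatencyRow& r : rows) {
        std::printf("%-18s speedup %.2fx, ~%.0f frames/s with TensorRT 2.1\n",
                    r.config, speedup(r), framesPerSecond(r.trt21Ms));
    }
}
```

Calling `printTable2Summary()` prints one line per row, for example roughly 179 frames/s for GoogLeNet in Max-P (1000 / 5.6 ms). Because these ratios are computed from the rounded millisecond values, the last digit can differ slightly from the Table 2 speedup column.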

Custom Layers

With a user plugin API for custom network layers, TensorRT 2.1 can run the latest networks and topologies, with expanded support including ResNet, recurrent neural networks (RNNs), YOLO, and Faster R-CNN. Custom layers are implemented in user-defined C++ plugins that implement the IPlugin interface, as in the following code.

```cpp
#include "NvInfer.h"  // TensorRT API: IPlugin, Dims
using namespace nvinfer1;

class MyPlugin : public IPlugin
{
public:
    // Number of output tensors the layer produces
    int getNbOutputs() const;
    // Shape of output `index`, derived from the input shapes
    Dims getOutputDimensions(int index, const Dims* inputs, int nbInputDims);
    // Called by the builder with the final tensor shapes and maximum batch size
    void configure(const Dims* inputDims, int nbInputs, const Dims* outputDims, int nbOutputs, int maxBatchSize);
    // Acquire and release resources used at runtime
    int initialize();
    void terminate();
    // Scratch GPU memory (in bytes) that enqueue() needs
    size_t getWorkspaceSize(int maxBatchSize) const;
    // Execute the layer on `stream`; custom CUDA kernels are launched here
    int enqueue(int batchSize, const void* const* inputs, void** outputs, void* workspace, cudaStream_t stream);
    // Size and contents of the plugin state stored with a serialized engine
    size_t getSerializationSize();
    void serialize(void* buffer);
protected:
    virtual ~MyPlugin() {}
};
```

You can build your own shared object with a custom IPlugin definition similar to the code above. Inside the user's enqueue() function, you can implement custom processing with CUDA kernels. TensorRT 2.1 uses this technique to implement a Faster R-CNN plugin for enhanced object detection. In addition, TensorRT provides new RNN layers for long short-term memory (LSTM) units and gated recurrent units (GRUs) for improved memory-based recognition of time-series sequences. Delivering these powerful new layer types out of the box speeds the deployment of advanced deep learning applications at the embedded edge.
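
The following minimal C++ sketch illustrates the kind of work done inside enqueue() for a hypothetical leaky-ReLU custom layer. To keep it runnable without a GPU or TensorRT, it is a CPU stand-in: a real plugin would receive device pointers and launch a CUDA kernel on the given stream. The function name, the leaky-ReLU layer, and the assumed buffer layout (one pointer per tensor, each buffer holding batchSize * volumePerSample floats stored sample after sample) are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical CPU stand-in for the body of a custom layer's enqueue().
// Mirrors the (batchSize, inputs, outputs) part of the IPlugin::enqueue()
// parameters; the real call also receives a workspace and a cudaStream_t.
// Assumed layout: inputs[0] and outputs[0] each hold
// batchSize * volumePerSample floats, one sample after another.
int leakyReluEnqueueCpu(int batchSize, const void* const* inputs, void** outputs,
                        std::size_t volumePerSample, float negativeSlope) {
    assert(batchSize > 0 && inputs != nullptr && outputs != nullptr);
    const float* in = static_cast<const float*>(inputs[0]);  // the layer's only input tensor
    float* out = static_cast<float*>(outputs[0]);           // the layer's only output tensor
    const std::size_t n = static_cast<std::size_t>(batchSize) * volumePerSample;
    for (std::size_t i = 0; i < n; ++i) {
        // Leaky ReLU: pass positives through, scale negatives.
        out[i] = in[i] >= 0.0f ? in[i] : negativeSlope * in[i];
    }
    return 0;  // enqueue() returns 0 on success
}
```

In an actual IPlugin, this loop would become a CUDA kernel launched from enqueue() on `stream`, with the per-sample volume, for example, recorded from the dimensions passed to configure().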

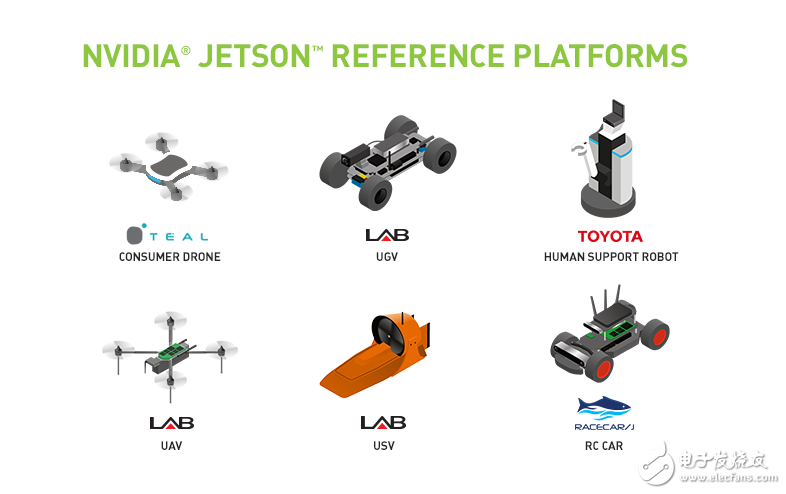

Figure 3: Jetson-powered robotic reference platforms for AI development.

With AI capabilities advancing rapidly, NVIDIA launched the Isaac initiative to push the state of the art in robotics and AI. Isaac is an end-to-end robotics platform for developing and deploying intelligent systems, spanning simulation, autonomous navigation stacks, and embedded Jetson for deployment. To help developers begin building autonomous AI, Isaac supports the robotic reference platforms shown in Figure 3. These Jetson-powered platforms include drones, unmanned ground vehicles (UGVs), unmanned surface vehicles (USVs), and human support robots (HSRs). The reference platforms provide a Jetson-powered foundation for experimentation in the field, and the program will expand over time to include new platforms and robots.

JetPack 3.1, which includes cuDNN 6 and TensorRT 2.1, is available now for Jetson TX1 and TX2. With low-latency single-batch inference and support for new networks through custom layers, the Jetson platform is more capable than ever for edge computing. To start developing AI, see our Two Days to a Demo series on training and deploying deep learning vision primitives such as image recognition, object detection, and segmentation. JetPack 3.1 substantially improves the performance of these vision primitives.