A lot has been happening in the tech world recently. Apple has drawn most of the attention, but I have been watching the war of words between NVIDIA and Intel and the fight behind it.

Big data and artificial intelligence become the giants' new battleground

With the explosive growth of smart hardware devices, the volume of data is growing beyond imagination. What is all this data used for? Every company that makes smart hardware wants to get the most out of the data its products generate, analyzing and processing it to make those products smarter for users. This demand is not limited to smart watches; it also shapes the future of smart homes, driverless cars, and robots.

Since hardware is the foundation of artificial intelligence and deep learning, the question is how to combine processors, GPUs, and algorithms to seize the market initiative and win the future. That is exactly what Intel and NVIDIA are both working on.

At last month's Intel Developer Forum (IDF), Intel pointed the conference theme squarely at big data, artificial intelligence, and deep learning, and several big acquisitions also show Intel's determination. The problem is that NVIDIA will not make things easy for Intel: it has been very active in this area, and it is genuinely making Intel nervous.

NVIDIA advertises that GPUs crush CPUs in deep learning

Why have NVIDIA and Intel, which used to stay out of each other's way and even cooperated happily, become more and more tit-for-tat? Mainly because the hardware that big data processing, artificial intelligence, and deep learning rely on is different from that of traditional computers.

According to NVIDIA, GPUs run deep learning workloads several times more efficiently than CPUs, including the Xeon processors Intel is so proud of. In artificial intelligence, chip performance and algorithm optimization can each be said to account for about half of the result, but at the chip level the industry consensus is that GPUs are far better suited than CPUs to artificial intelligence and deep learning algorithms. This is why NVIDIA has been able to gain momentum in the field.

Compared with the CPU, the GPU has four main advantages:
1. The GPU is designed for parallel computing, while the CPU is optimized for executing serial instruction streams, and artificial intelligence needs massive parallelism.
2. In the same chip area, a GPU can integrate many more computing units.
3. For highly parallel workloads, the GPU delivers far more computation per watt than the CPU.
4. The GPU's memory subsystem offers much higher bandwidth, which helps when large amounts of data must be processed.
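Advantage 1 is easiest to see in a neural-network layer, which is essentially a matrix-vector product: each output element depends only on one row of weights and the input, so every element can be computed independently. The Python sketch below is illustrative only; the toy weights are hypothetical, and the thread pool merely shows how the work splits into independent pieces. It does not model real GPU execution, and Python threads give no real speedup here.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """One independent work item: a single output element of the layer."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_serial(matrix, vec):
    # CPU-style: one instruction stream walks through the rows in order.
    result = []
    for row in matrix:
        result.append(dot(row, vec))
    return result


def matvec_parallel(matrix, vec, workers=4):
    # GPU-style decomposition: rows do not depend on each other, so they can
    # all be dispatched at once. pool.map keeps results in row order.
    # (Threads only illustrate the split; the GIL prevents a real speedup.)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


if __name__ == "__main__":
    weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]  # hypothetical
    inputs = [1, 0, -1]
    print(matvec_serial(weights, inputs))
    print(matvec_parallel(weights, inputs))
```

Both functions produce the same result; the point is that the parallel version never needs one row's answer before starting another, which is the property GPUs exploit with thousands of cores.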

In the first half of the year, NVIDIA launched the Tesla P100 GPU for deep neural networks and built the DGX-1 deep learning supercomputer around it. Judging from photos published by the media, Huang Renxun (Jen-Hsun Huang) personally delivered a DGX-1 supercomputer to Musk. We know Musk as the real-life prototype of Iron Man and the CEO of Tesla and SpaceX, but he is also one of the leaders of the OpenAI project, a team working on artificial intelligence development. What Musk will use this new NVIDIA supercomputer for, we do not know.

NVIDIA and IBM team up to deal with Intel

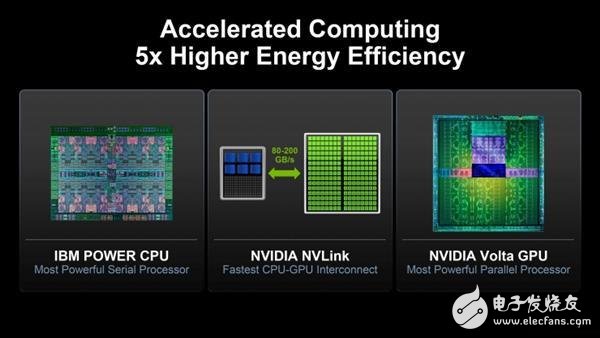

Intel has always dominated the server space, and IBM Power and ARM hold only a small share. But when it comes to artificial intelligence and deep learning, Intel's advantage seems to vanish. IBM has launched several new servers together with NVIDIA, all aimed at artificial intelligence. According to IBM, they process data five times faster than other platforms, and their average performance is 80% higher than that of Intel's x86 servers.

First, the Power processor's own parallelism helps with data partitioning and multi-process workloads when handling big data, which gives it some advantages over Intel's x86 processors. Second, Power works particularly efficiently with NVIDIA's Tesla P100. Without going into technical detail, the main reason is that the Power processor supports NVLink, which offers up to 40GB/s compared with only 16GB/s for the PCI-E interface used by current x86 systems, making data exchange between Power processors and NVIDIA GPUs much more efficient. Moving from PCI-E to NVLink alone yields a 14% performance improvement.
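Why does a 2.5x faster link (40GB/s versus 16GB/s) translate into only a 14% overall gain? A rough way to reason about it is Amdahl's law, a general principle swapped in here for illustration rather than IBM's own method: only the share of runtime spent moving data speeds up. The sketch below assumes a hypothetical 8 GB transfer and a hypothetical 20% transfer share; neither number comes from IBM or NVIDIA.

```python
def transfer_time_s(size_gb: float, bandwidth_gb_s: float) -> float:
    """Seconds needed to move size_gb gigabytes over a link of the given bandwidth."""
    return size_gb / bandwidth_gb_s


def overall_speedup(transfer_fraction: float, link_speedup: float) -> float:
    """Amdahl's law: only the transfer share of the runtime gets faster."""
    return 1.0 / ((1.0 - transfer_fraction) + transfer_fraction / link_speedup)


PCIE_GB_S = 16.0    # PCI-E figure quoted in the article
NVLINK_GB_S = 40.0  # NVLink figure quoted in the article

if __name__ == "__main__":
    batch_gb = 8.0  # hypothetical amount of data shipped to the GPU
    t_pcie = transfer_time_s(batch_gb, PCIE_GB_S)
    t_nvlink = transfer_time_s(batch_gb, NVLINK_GB_S)
    print(f"PCI-E: {t_pcie:.2f} s, NVLink: {t_nvlink:.2f} s")

    link_speedup = NVLINK_GB_S / PCIE_GB_S  # the link itself is 2.5x faster
    # Hypothetical assumption: data transfer takes 20% of the job's runtime.
    gain = overall_speedup(0.20, link_speedup) - 1.0
    print(f"overall gain: {gain:.1%}")
```

Under these made-up assumptions the overall gain lands in the same low-teens range as the quoted 14%, which shows why a much faster interconnect helps but does not multiply total performance by itself.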

Put bluntly, the two are quite likely to join forces to push Intel out of this new market. No wonder Intel cannot help feeling anxious and nervous.

Intel says deep learning is only one aspect

Faced with NVIDIA and IBM advancing step by step, Intel cannot sit still. Both at the recent IDF and in its communications with the media, Intel has repeatedly stated that deep learning is only one part of artificial intelligence and cannot represent all of it.

In deep learning, Intel has indeed met a formidable rival in NVIDIA. So where does Intel's advantage lie? In its broad, accumulated technology base. Intel's counterattack is to offer comprehensive end-to-end solutions.

At present, Intel covers the whole chain: sensing data at the source (RealSense technology and others), data storage (3D XPoint non-volatile memory), data transmission (next-generation 5G networks), and cloud services and back-end data analysis and processing (servers, Xeon processors, and so on). This forms a complete closed loop that lets Intel provide users with end-to-end services, something NVIDIA, whose foothold is mainly in deep learning, would find hard to match in the short term. Intel can therefore use its end-to-end layout to gradually close the gap in deep learning, where it is relatively on the back foot.

Intel's big acquisitions: a bet on the future

Since last year, Intel has made three major acquisitions related to artificial intelligence, each of them big news that shook the industry. First, last year Intel acquired Altera, a maker of programmable chips, for $16.7 billion, the largest acquisition in Intel's history. With Altera's programmable chips, Intel has gained an edge in big data computing, and it plans to introduce new Xeon Phi processors targeting big data, high-performance computing, and artificial intelligence.

This year, Intel completed the acquisitions of Nervana, a deep learning chip startup, and Movidius. Intel hopes Nervana will substantially improve the AI performance of its processors.

Movidius may look like a company known for VR and AR, but it actually focuses on 3D vision computing, which fits well with Intel's RealSense 3D technology. In the big data era, many people pay attention to VR, AR, and network and server infrastructure, but few notice the value of RealSense, which effectively gives smart devices a pair of eyes. In the future, much of the data collected by smart devices will come from visual sensors like these, and Intel has clearly laid solid groundwork in this area.

NVIDIA's license to Intel is about to expire

In fact, beyond their rivalry in artificial intelligence, Intel and NVIDIA have had rifts in past cooperation. As early as 2011, the two went to court over a patent dispute. The case was closed when Intel agreed to pay $1.5 billion to license NVIDIA's graphics technology and required NVIDIA to stay out of the x86 server market. The agreement reportedly expires on March 17, 2017, which means Intel will regain a free hand: it could end its cooperation with NVIDIA on consumer and server products and work fully with AMD instead. And with NVIDIA's server business booming, it is not impossible that NVIDIA will move into the x86 architecture.

Intel seems to be in close contact with AMD

Some recent signs do suggest that Intel and AMD are getting closer. It is rumored that AMD has recently moved some staff from its GPU business into a separate working group that includes software and hardware experts as well as asset-business professionals. This team is most likely dedicated to working with Intel. Since Intel's CPUs do not depend on any of NVIDIA's core patents, a deal between Intel and AMD is entirely possible.

For years, the love-hate relationships among Intel, NVIDIA, and AMD have been a major attraction in the tech world. Although technology has entered the big data era and is moving toward the era of artificial intelligence, this love triangle still holds and will keep playing out. Tencent Digital will continue to follow NVIDIA's and Intel's moves in artificial intelligence and their subtle relationship with AMD.
