The camera is the core sensor of ADAS. Compared with millimeter-wave radar and lidar, its biggest advantage is recognition: it can tell whether an object is a car or a person, and it can read the color of a sign. The automotive industry is price-sensitive, and camera hardware is relatively cheap. Because computer vision has developed rapidly in recent years, a considerable number of startups have entered ADAS from the camera side.

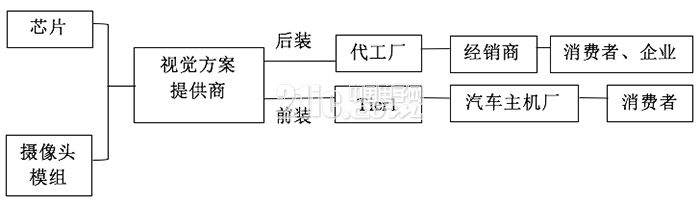

These startups can be collectively referred to as vision solution providers. They master the core vision sensor algorithms and provide downstream customers with complete solutions that include the on-board camera module, the chip, and the software algorithms. In the OEM (pre-installed) market, the vision solution provider acts as a Tier 2 supplier, working with Tier 1 suppliers to define products for OEMs. In the aftermarket, besides supplying complete sets of equipment, some providers also sell the algorithms alone. This article analyzes the functions of visual ADAS, its hardware requirements, evaluation criteria, and so on. The follow-up article, "[Cyun Yun Report] ADAS visual solutions, entry level (Part 2)", explains the products of Mobileye and 11 domestic suppliers in detail.

Visual ADAS Supply Chain System

First, functions that visual ADAS can realize

Because of the need for safety recording, parking assistance, and so on, most in-car camera applications today are auxiliary functions such as the driving recorder and the reversing image. Typically, wide-angle cameras installed at various positions on the vehicle body capture images, which are calibrated and processed by algorithms to produce a single image, or stitched into a surround view, that covers the driver's blind spots. These functions do not involve vehicle control, so the emphasis is on video processing; the technology is mature and is gradually becoming widespread.

Currently, in driving assistance, the camera alone can implement many functions, and these evolve gradually along the development path of automatic driving.

These functions emphasize processing of the input image: effective target motion information is extracted from the captured video stream for further analysis, and the system then issues an early warning or directly actuates the control mechanism. Compared with the video output functions, they place more emphasis on real-time performance at high speed, and this part of the technology is still developing.
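As one illustration of how target motion information can become an early warning, forward-collision warnings are commonly framed in terms of time-to-collision (TTC): the gap to the target divided by the closing speed. The sketch below is not taken from any supplier's product; the function names and the 2.7 s threshold are assumptions chosen only for illustration.

```python
import math


def time_to_collision(distance_m, closing_speed_mps):
    """Seconds until contact; math.inf when the gap is not closing."""
    if closing_speed_mps <= 0:
        return math.inf
    return distance_m / closing_speed_mps


def should_warn(distance_m, closing_speed_mps, threshold_s=2.7):
    """True when the TTC drops below the (assumed) warning threshold."""
    return time_to_collision(distance_m, closing_speed_mps) < threshold_s


# A target 30 m ahead, closing at 15 m/s, leaves 2 s: below the threshold.
print(should_warn(30.0, 15.0))
# The same closing speed at 60 m leaves 4 s: no warning yet.
print(should_warn(60.0, 15.0))
```

In a real system the distance and closing speed would themselves be estimated from the video stream, which is where the real-time requirement comes from.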

Second, software and hardware requirements of visual ADAS

A vision-based ADAS product consists of software and hardware, mainly the camera module, the core algorithm chip, and the software algorithms. On the hardware side, the driving environment (vibration, high and low temperatures, etc.) has to be considered; the basic premise is meeting automotive-grade requirements.

(1) Car ADAS camera module

Car ADAS camera modules require customized development. To work in all weather and at all hours, a module generally needs to cope with scenes where the contrast between light and dark is extreme (entering and leaving a tunnel) by balancing the overly bright and overly dark parts of the image (wide dynamic range); to be sensitive to light (high sensitivity); and to avoid putting too much load on the chip (so it should not simply chase high pixel counts).

The camera module is the foundation. Just as only a decent photo is worth retouching, the algorithm can be effective only when the captured image is good enough.

In addition, ADAS and driving recorders place different requirements on camera parameters. The camera of a driving recorder needs to see as much of the environment around the front of the car as possible (mounted at the rear-view mirror position, it should see both front wheels, which gives a horizontal viewing angle of about 110 degrees). An ADAS camera is more concerned with leaving more time for judgment while driving, so it needs to see farther. As with wide-angle and telephoto camera lenses, the two properties cannot both be maximized, so ADAS hardware selection has to strike a balance.
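The trade-off between viewing angle and viewing distance can be made concrete with a simple pinhole-camera estimate of how many pixels a distant vehicle occupies. This is a rough sketch under stated assumptions: the 1280-pixel image width, the 1.8 m vehicle width, the 100 m distance, and the 50-degree "narrow" lens are illustrative values, not figures from the article; only the 110-degree angle comes from the text.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Pinhole focal length in pixels for a given horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def pixels_on_target(object_width_m, distance_m, image_width_px, hfov_deg):
    """Approximate width in pixels of an object seen head-on at a distance."""
    f_px = focal_length_px(image_width_px, hfov_deg)
    return f_px * object_width_m / distance_m


# Same sensor, same car at 100 m, two different lenses.
for hfov in (110, 50):
    px = pixels_on_target(1.8, 100.0, 1280, hfov)
    print(f"{hfov:>3} deg lens: about {px:.0f} px across the vehicle")
```

With these assumed numbers the wide lens leaves only about 8 pixels across the vehicle, while the narrower lens leaves about 25, which is why a camera that must recognize targets far ahead cannot also use a very wide angle.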

(2) Core algorithm chip

Image-related algorithms demand a lot of computing resources, so chip performance matters. If deep learning is layered on top of the algorithm to improve the recognition rate, the hardware requirements only increase. The main performance indicators are computing speed, power consumption, and cost.

Most of the chips currently used for ADAS cameras come from foreign suppliers. Major suppliers include Renesas Electronics, STMicroelectronics, Freescale, Analog Devices, Texas Instruments, NXP, Fujitsu, Xilinx, and NVIDIA, which provide chip solutions including ARM, DSP, ASIC, MCU, SoC, FPGA, and GPU.

ARM, DSP, ASIC, MCU, and SoC are embedded solutions programmed in software. Because an FPGA is programmed directly at the hardware level, it processes faster than these embedded solutions.

GPUs and FPGAs both have parallel processing capability. For data such as images, and especially with deep learning algorithms that require many pixels to be computed simultaneously, FPGAs and GPUs have the advantage. The two types of chip share a similar design intent: handling large numbers of simple, repetitive operations. The GPU offers higher performance but consumes more energy. Because the FPGA is programmed and optimized directly at the hardware level, its energy consumption is much lower.

Therefore, FPGAs are considered a popular solution when balancing algorithms against processing speed, and they are a good choice particularly for the OEM (pre-installed) market and once the algorithm has stabilized. At the same time, FPGAs are technically demanding. Computer vision algorithms are written in C, whereas FPGA hardware is described in Verilog, and the two languages are different, so whoever ports an algorithm to an FPGA needs both a software background and a hardware background. With talent as expensive as it is today, that is no small cost.

At this stage, there are many automotive-grade chips to choose from for traditional computer vision algorithms, but a low-power, high-performance chip suited to traditional algorithms combined with deep learning has not yet really appeared.

(3) Algorithm

ADAS vision algorithms have their roots in computer vision.

Traditional computer vision recognizes objects in roughly these steps: image input, preprocessing, feature extraction, feature classification, matching, and final recognition.

Two of these stages rely especially on professional experience. The first is feature extraction. Many features are available for identifying obstacles, so feature design is critical. To decide whether the obstacle ahead is a car, for example, the reference feature might be the taillights, or the shadow that the vehicle chassis casts on the ground. The second is pre-processing and post-processing. Pre-processing includes smoothing noise in the input image, contrast enhancement, and edge detection. Post-processing means reprocessing the candidate results of classification and recognition.
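The three pre-processing operations named above can be sketched on a toy grayscale image. This is a minimal pure-Python illustration, assuming a 3x3 mean filter for smoothing, a linear stretch for contrast enhancement, and a Sobel operator for edge detection; these particular choices, the function names, and the 6x6 test frame are assumptions, and a production system would use an optimized image library on the target chip instead.

```python
def box_blur(img):
    """Smooth noise with a 3x3 mean filter (borders use the neighbours that exist)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out


def stretch_contrast(img, lo=0.0, hi=255.0):
    """Linearly rescale intensities so that they span [lo, hi]."""
    flat = [v for row in img for v in row]
    vmin, vmax = min(flat), max(flat)
    if vmax == vmin:  # flat image: nothing to stretch
        return [[lo for _ in row] for row in img]
    scale = (hi - lo) / (vmax - vmin)
    return [[lo + (v - vmin) * scale for v in row] for row in img]


def sobel_magnitude(img):
    """Edge strength from horizontal and vertical Sobel gradients (borders stay 0)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def preprocess(img):
    """Smoothing, then contrast enhancement, then edge detection."""
    return sobel_magnitude(stretch_contrast(box_blur(img)))


# Toy 6x6 frame: dark left half, brighter right half (one vertical edge).
frame = [[40] * 3 + [120] * 3 for _ in range(6)]
edges = preprocess(frame)
strongest = max(range(6), key=lambda x: edges[2][x])
print("strongest edge response in column", strongest)
```

The edge map responds most strongly at the boundary between the dark and bright halves, which is the kind of intermediate result that hand-designed features such as taillights or chassis shadows are then built on.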

Computer vision models from scientific research do not necessarily perform well when applied to the real environment, because research algorithms are developed under added constraints on conditions such as weather and road complexity. In practice, besides performance in complex environments, the robustness of the algorithm (whether it stays stable) across different environments must also be considered.

One of the more important changes in algorithms is the spread of deep learning.

Deep learning lets the computer simulate the neural networks of human thinking, so that it can learn and judge by itself. Labeled raw data is fed directly to the computer: for example, a batch of pictures of unusual vehicles is collected and handed to the computer so that it learns what a car is. In this way, the feature extraction and preprocessing steps can be dropped, and the perception process is simplified into two steps: image in, result out.

A fairly common view in the industry is that, for perception, deep learning will overtake traditional vision algorithms. Deep learning models are now open source, and there are not many types of algorithm, so it is possible that a large number of excellent results will emerge with a lower barrier to entry. However, for lack of a suitable in-vehicle platform, deep learning is still some distance from becoming a product.

The industry's view of deep learning in ADAS applications is fairly objective and calm. Many people believe that a deep learning algorithm is a black box, similar to the process of human intuitive decision-making: it can output a result very quickly, but the cause is hard to trace after an accident. Deep learning should therefore be combined with a rational decision component and designed in partitioned blocks.
